Sep 27, 2020 0

Engineering lessons learnt at mbr

I’ve left the company I was previously working for at the end of 2018. In this post I want to reflect on some technical things I learnt there which I found really valuable.

- Have a style guide and enforce it with a linter

A linter is a program which checks that your code follows certain style rules. You can e.g. hook this up to the CI or as a pre-commit hook. It should not be possible to merge if the style is violated. It’s common to catch quite some bugs early this way. - Rebase heavily, group commits semantically.

Don’t e.g. put a refactoring change in a commit with a bug fix. Split commits if they contain multiple semantically different changes, reorganize a PR until it fits semantically. Don’t squash on merge, but rather keep individual commits to retain this semantic order in the master branch.This is how Git works best. It makes it a lot easier to e.g. track bugs. This is because the commit message explains what the intention behind the change was and one can verify that the commit does exactly this and nothing else. Also you can use git internal tooling (like

git bisect). - Do not squash on commit

This enables the possibility to prefix a commit with something likeRevert me: Your message. This commit can easily begit revert-ed later on to only revert this specific change. We used this often to merge temporary fixes, which were supposed to be ephemeral. By indicating the short-lived intention of this change it’s clearly stated that this needs to be removed again. Also, you can always search for the correspondingRevert: "Revert Me: Your message"commit, to verify that something was actually thrown out again. - Use

git blame

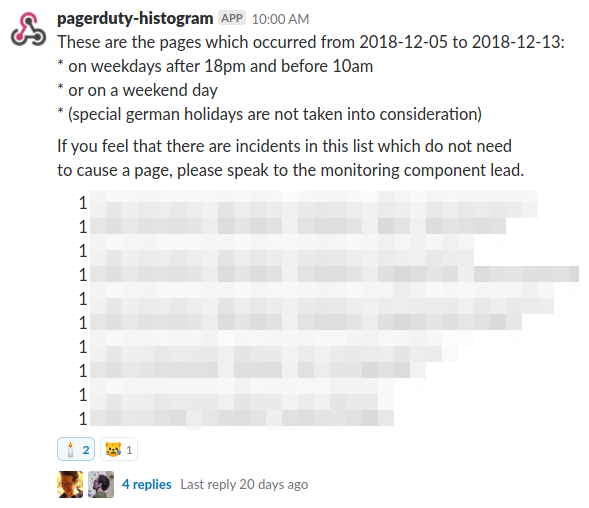

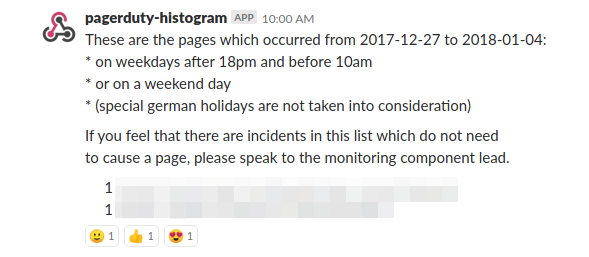

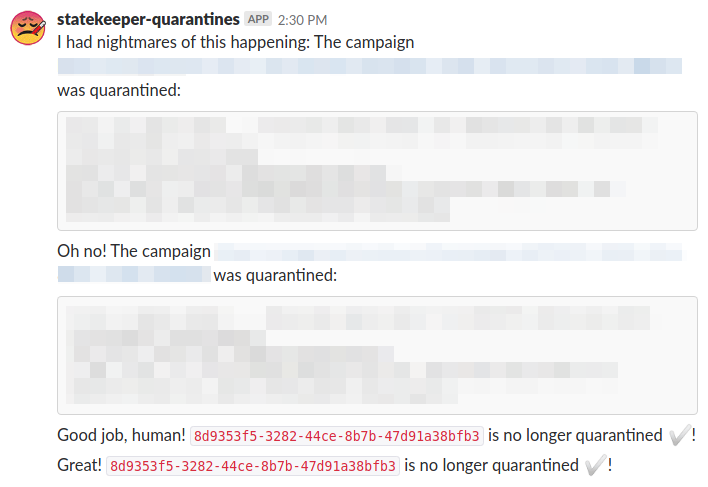

…to find out about why something was merged (works only when the commit message describes the intention). I used this often to look up the original PR and read through the discussion there to better understand why a decision was made (GitHub links the originating PR in the blame view). - Make errors visible

In the case of an error rather than continuing to run and fallback on some default behavior it can oftentimes be much better to just let the program crash and report the error. Otherwise you quickly run into the case where errors happen, but are not recognized or acted properly upon. And oftentimes the default behavior you defined might be suitable for one class of errors, without you having thought about other error classes which can also happen. - Return early

Don’t do something likeif foo { bar(); } else { return ...; }instead do

if !foo return ...; bar();Of all style guide things this is a really significant one that makes code a lot clearer (and keeps the indentation levels low).

- Most of the time spent when implementing something is reading code

- Rollbacks should be easy

This refers to deployments. At mbr it was quite easy to build an arbitrary commit and deploy it. This makes you feel a lot better about changes where it’s not entirely clear if they might cause issues. - A feature commit must contain a test for that feature

- A bug fix should contain a test which would have catched the bug

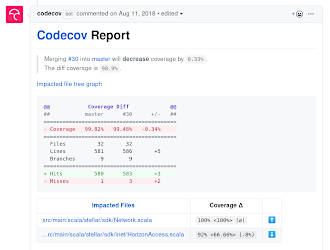

Another thing which we didn’t do at mbr, but which I find highly valuable is to put a lot of effort on test coverage. A number of open source projects have integrated e.g. codecov.io into their CI pipeline in a way that it’s only possible to merge if the test coverage stays either the same or goes up.